- cross-posted to:

- android@lemdro.id


See, it turns out that the Rabbit R1 seems to run Android under the hood and the entire interface users interact with is powered by a single Android app. A tipster shared the Rabbit R1’s launcher APK with us, and with a bit of tinkering, we managed to install it on an Android phone, specifically a Pixel 6a.

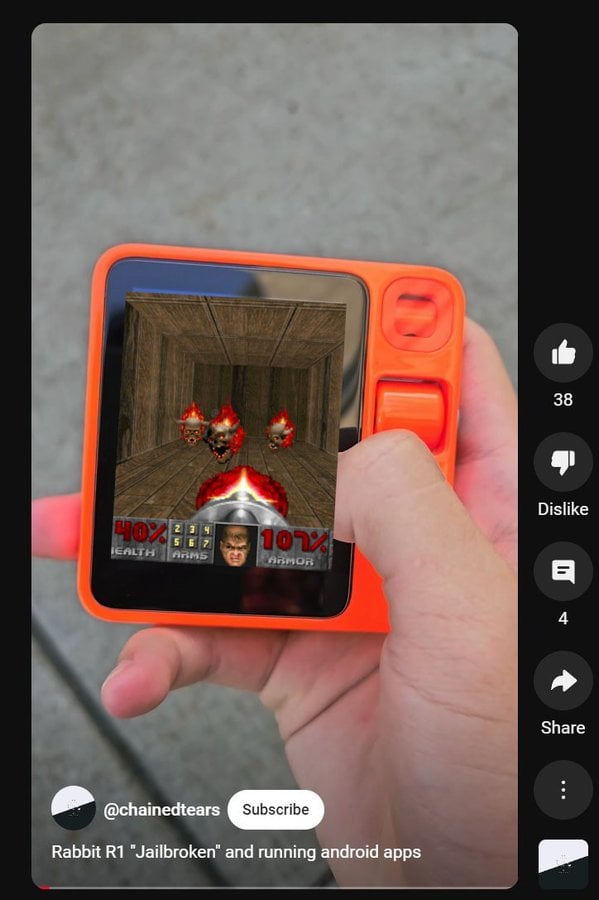

Edit: Someone also got Doom and Minecraft running on this thing.

It needs to be separate hardware because Google and Apple have a stranglehold on their respective OSes. No way in hell Apple/Google would give a random app deep integration with AI. Although it isn't there yet, Rabbit (and Humane) seem to want to give the AI a ton of control over the system, data, and apps.

All of the apps on the Rabbit run in the cloud anyway, as do the AI bits. Nothing is running locally on the device. There's nothing the Rabbit device does that couldn't be done via an app or web portal to those cloud services instead.

At least the Humane AI Pin was an attempt to create a new class of device. The Rabbit R1, however, is effectively just an oddly shaped Android phone locked to running a single app. The only reason it seems to exist is to let an existing hardware company jump on the AI bandwagon.

Currently, the Rabbit does two things for me that an app on my phone can't:

1. It's not my phone. I value this enough to pay for it. I spend more time than I would like on my phone, and I'm happy when I can use another single-purpose device to help me stay focused.
2. The push-to-talk hardware button has been more pleasant for me to use than the ChatGPT shortcut on my Pixel phone.

In the end, ChatGPT + Perplexity in a box fills a space in my life that I can't fill with anything else, given my criteria.

I understand your criteria are different and you value different things. That's OK. It just means this device isn't for you.

What would prevent an Android app from having "deep integration with AI"? If the AI is in the cloud, then it's all done through normal web requests, which don't even require a runtime permission, let alone some special allowance from Google.

Even now they’re already leveraging their OS-level control. The Android Authority guys said in their report, “the Rabbit R1’s launcher app is intended to be preinstalled in the firmware and be granted several privileged, system-level permissions — only some of which we were able to grant”. I don’t work at Rabbit, so I don’t know exactly what modifications they’ve done to their AOSP fork, but they’re doing something.

If I had to guess, I'd say they've messed with the power management of AOSP and probably the process scheduling somehow. I say this because the Rabbit R1 is hands down the fastest way to access an assistant that I've used. I have a ChatGPT shortcut on the home screen of my Pixel 8, and the ChatGPT app is constantly killed in the background, so I often go to access the assistant and have to wait for the app to load. The R1 is instant.

And that’s without counting the time it takes to face or fingerprint unlock the phone, then tap an icon.

No, I would not have paid $200 if Rabbit were an app. I have ChatGPT and Perplexity on my phone, and I don't like the experience compared to the R1. I paid $200 for the end-to-end Rabbit experience.

Btw, I get that some people don’t mind unlocking their phone, tapping an icon, waiting for it to load, asking a question, then getting an answer. That’s fine. If you’re happy with that experience, then the Rabbit R1 is not for you.

You brought up advantages of it being a device, which I don’t disagree with. Nothing you said explained the “allow deep integration with AI”. That’s the only part I was questioning.

Makes sense. They can do whatever they want with AOSP as long as they don't want to certify it as "Android" to get access to the Play Store.

One amendment: I'd say it's because existing phones won't let an app listen for a wake word or phrase; phones hard-code that to the vendor's own software. Having passive access to the microphone and camera, and being able to activate and show whatever they want on screen without contending with a platform lock screen that won't play ball with them, that sort of thing. "AI" access wasn't really going to be the challenge.

It's not that they didn't run on existing phones; I can see why they didn't. What I find more stupid is that they stopped short of just making their device a phone capable of traditional interaction. As it stands, it's going to offer a subset of the capabilities of phones coming out this year, which will likely offer similar "AI" features while still supporting traditional handheld use. If they didn't want to sign up for all that, they probably could have teamed up with someone like Motorola, who might be hungry enough to let Rabbit do their thing on a Moto G variant or something.

Apps can absolutely listen for a wake word tho