Hi everyone, I host a server with a few services running for my family and friends. The server sometimes freezes, and the services stop responding. If I try to SSH into the server, it takes a long time (3 to 5 minutes) to connect. Once connected, every letter I type takes 2 to 3 minutes to appear on screen.

It seems like the server is overloaded, but I am not sure what load it is running. I have netdata installed and was able to pull up the following screenshots for insight.

Can someone please help me in troubleshooting the issue?

I have tried stress testing my RAM and CPU, and they were fine. But I would start troubleshooting from scratch if you have recommendations for testing software.

Please also let me know if there is anything I can pull from netdata to help in troubleshooting.

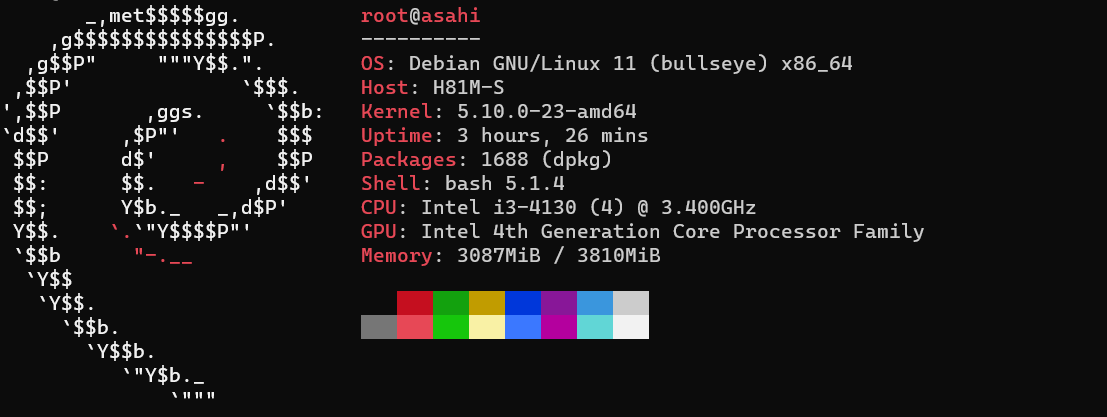

System Specification:

System RAM

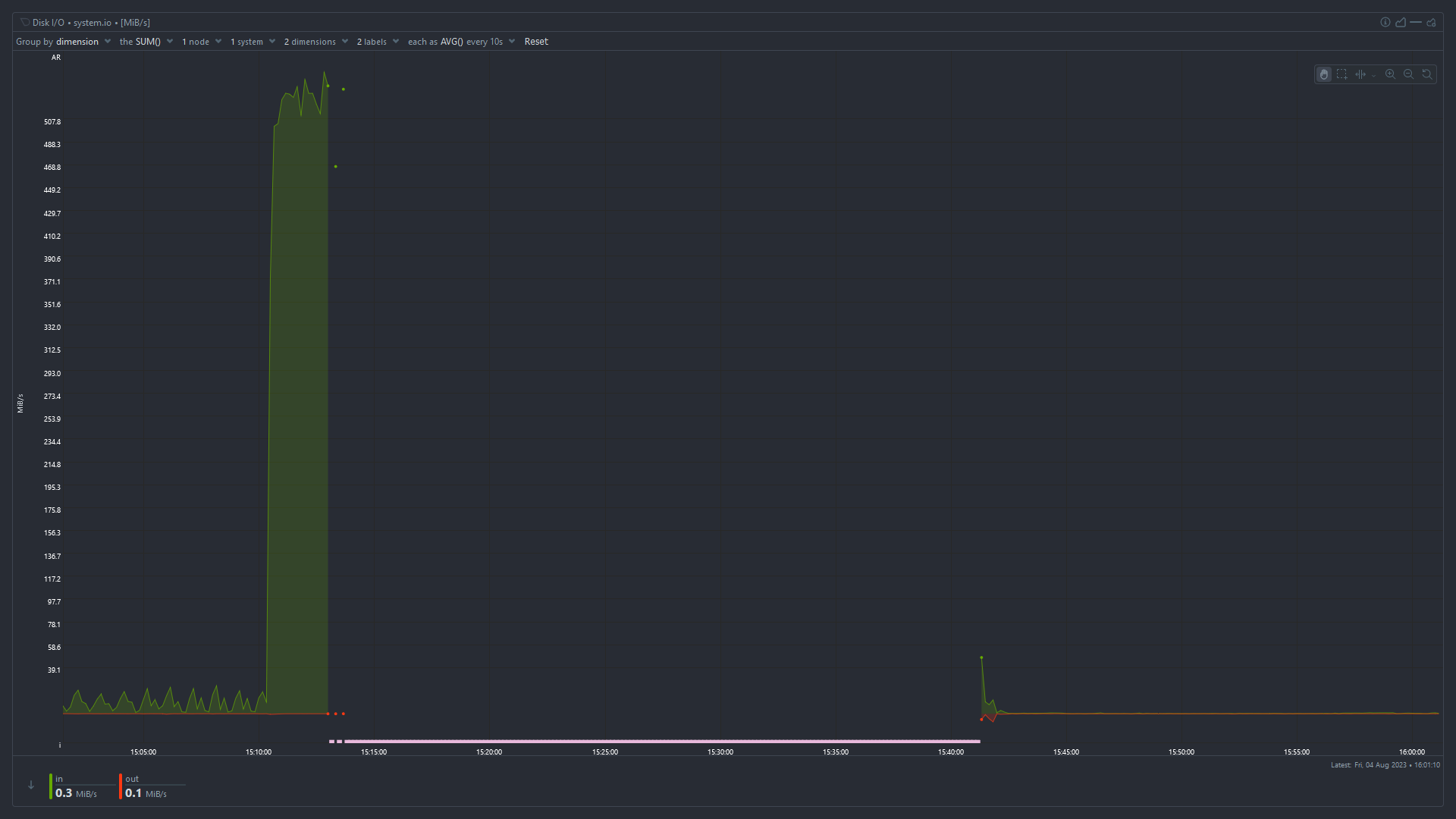

Disk I/O

Total CPU Utilization

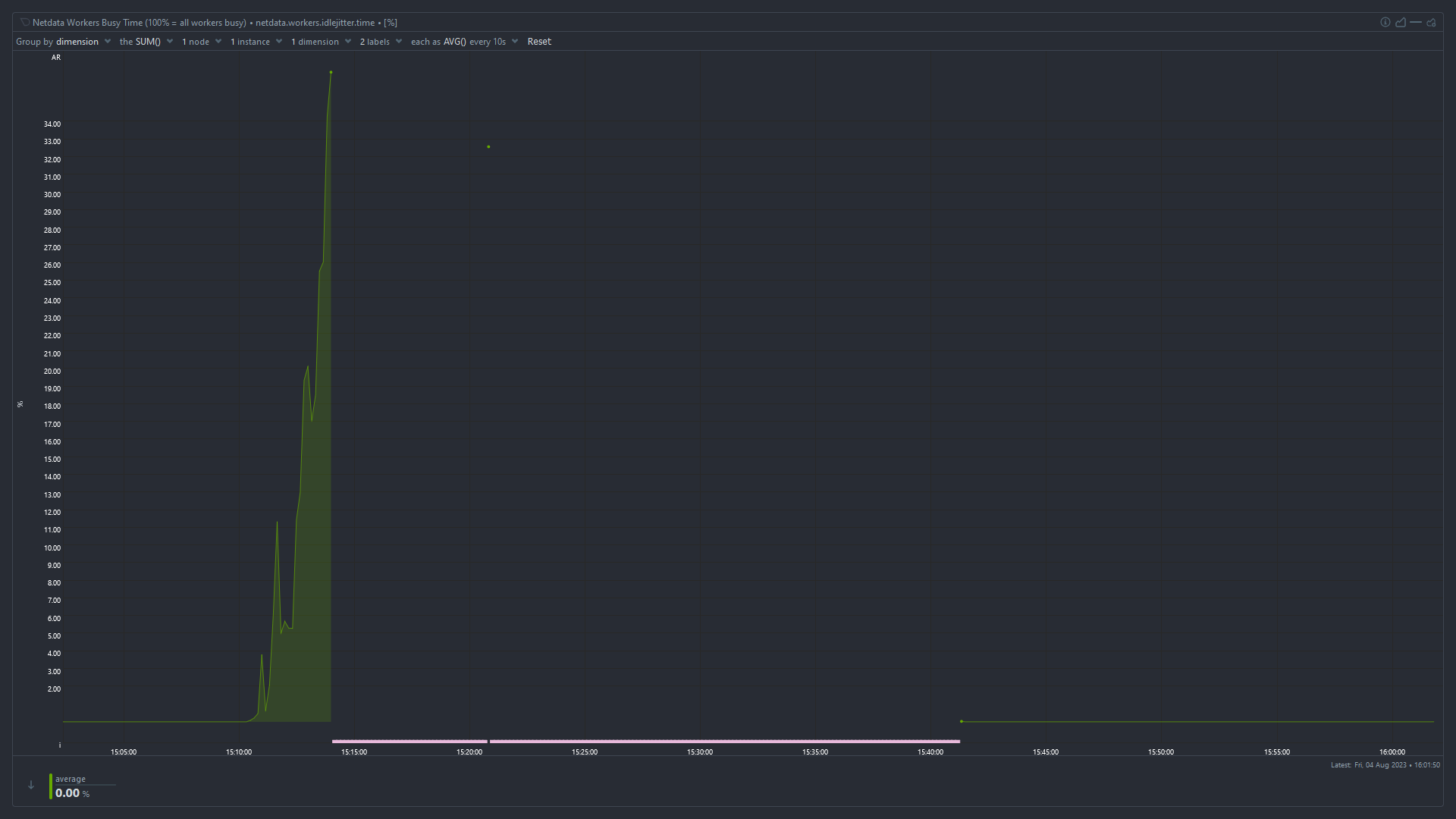

Idle Jitter

High iowait and high memory usage suggest that something has used up all the available memory and the system is swapping like mad to stay alive. I’m surprised the OOM killer hasn’t intervened at that point.

You need to monitor which process is using all the memory. The easiest way is probably to keep `htop` running in a `screen` or `tmux` session, periodically connect, and look at which processes have the highest memory usage.

Superb. I will do that. Would increasing RAM capacity solve the issue? Also, does high I/O wait time indicate an issue with the boot drive (which is a 4-year-old SSD)?

It depends whether the problem is that you don’t have enough RAM, or something is using more RAM than it should. In my experience it’s almost always the latter.

No, it means the CPU is waiting for disk I/O to complete before it can work on tasks. When available RAM is low, pages get swapped out to disk and need to be swapped back in before the CPU can use them. It could also be an application that’s reading and writing a huge amount from/to disk or the network, but given the high memory usage I’d start looking there.
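To check the swapping explanation directly instead of inferring it from iowait, the kernel keeps `pswpin` and `pswpout` counters in `/proc/vmstat` (pages swapped in and out since boot); sampling them twice gives a rate. Below is a minimal sketch, assuming a Linux host; the function names and the 5-second window are my own choices. `vmstat 5` (the `si`/`so` columns) shows much the same thing without a script.

```python
import time


def parse_kv(text):
    """Parse /proc/meminfo ("Key:   123 kB") or /proc/vmstat ("key 123") text."""
    values = {}
    for line in text.splitlines():
        parts = line.replace(":", " ").split()
        if len(parts) >= 2 and parts[1].isdigit():
            values[parts[0]] = int(parts[1])
    return values


def read_kv(path):
    with open(path) as f:
        return parse_kv(f.read())


def swap_rates(before, after, seconds):
    """Pages swapped in and out per second between two /proc/vmstat snapshots."""
    swapped_in = after.get("pswpin", 0) - before.get("pswpin", 0)
    swapped_out = after.get("pswpout", 0) - before.get("pswpout", 0)
    return swapped_in / seconds, swapped_out / seconds


if __name__ == "__main__":
    interval = 5  # seconds; arbitrary sampling window
    mem = read_kv("/proc/meminfo")  # meminfo values are in kB
    swap_used = mem.get("SwapTotal", 0) - mem.get("SwapFree", 0)
    print(f"MemTotal:     {mem.get('MemTotal', 0) // 1024} MiB")
    print(f"MemAvailable: {mem.get('MemAvailable', 0) // 1024} MiB")
    print(f"Swap used:    {swap_used // 1024} MiB")
    first = read_kv("/proc/vmstat")
    time.sleep(interval)
    second = read_kv("/proc/vmstat")
    pages_in, pages_out = swap_rates(first, second, interval)
    print(f"swap-in {pages_in:.1f} pages/s, swap-out {pages_out:.1f} pages/s")
```

Sustained non-zero swap rates while the box is sluggish point at memory pressure rather than a failing SSD. On x86-64 a page is normally 4 KiB.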

Okay. Thanks for the insight. Planning to do this:

Will report back with findings when it crashes again. Thanks for all the help.

It’ll probably be obvious before it crashes; you can see in the graphs that the “used” memory increases steadily after a reboot. Take a look now and see which process is causing that.
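If leaving `htop` open isn't practical, a small script can take the same snapshot non-interactively, so its output can be appended to a log every few minutes and compared over time to spot the process that keeps growing. This is a minimal sketch, assuming Linux: it reads the `Name:`, `VmRSS:` and `VmSwap:` fields of `/proc/<pid>/status` and ranks by RSS plus swap, since a process that has been partly swapped out looks smaller than it is if you only look at RSS. The function names are my own.

```python
import os
import time


def mem_from_status(text):
    """Return (name, rss_kib, swap_kib) parsed from /proc/<pid>/status text."""
    name, rss, swap = "?", 0, 0
    for line in text.splitlines():
        key, _, value = line.partition(":")
        if key == "Name":
            name = value.strip()
        elif key == "VmRSS":
            rss = int(value.split()[0])
        elif key == "VmSwap":
            swap = int(value.split()[0])
    return name, rss, swap  # kernel threads have no VmRSS/VmSwap, so 0


def top_by_memory(n=10, proc="/proc"):
    """Return the n processes using the most RSS + swap, largest first."""
    rows = []
    for pid in os.listdir(proc):
        if not pid.isdigit():
            continue
        try:
            with open(os.path.join(proc, pid, "status")) as f:
                name, rss, swap = mem_from_status(f.read())
        except OSError:  # process exited meanwhile, or is not readable
            continue
        rows.append((rss + swap, rss, swap, int(pid), name))
    rows.sort(reverse=True)
    return rows[:n]


if __name__ == "__main__":
    print(time.strftime("%Y-%m-%d %H:%M:%S"))
    for _total, rss, swap, pid, name in top_by_memory():
        print(f"{rss / 1024:8.1f} MiB rss {swap / 1024:8.1f} MiB swap  {pid:>7}  {name}")
```

For example, a crontab line such as `*/5 * * * * python3 /root/topmem.py >> /var/log/topmem.log` (hypothetical paths) would record a snapshot every five minutes, so the log survives the next freeze.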

It’s difficult to read the graph, but it looks like you have less than 4 GB of RAM. Depending on what sort of OS and services you’re running (see the suggestions above), this is likely the biggest issue.

You haven’t mentioned which services you’re running, but 4 GB might be enough for a basic OS with NAS file-sharing services. Anything heavier, like running container services, will eat that up. You’d want at least 8 GB.

Also, do you have a dedicated graphics card? If you have integrated graphics, some RAM is taken from the system and shared with the GPU. If you’re only using the command line, you might eke out a little more RAM for the system by reducing the VRAM allocation in your BIOS. See: https://en.m.wikipedia.org/wiki/Shared_graphics_memory

You can also do that from the **Applications** menu in netdata (the `mem` chart).

I would also check the swap usage chart. I also think the parent poster is correct and you are running into memory exhaustion: the system suddenly starts swapping like mad, disk I/O spikes, and everything becomes unresponsive.

The easiest way is to disable swap and then see what is killed by the OOM killer.
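When the OOM killer does act, the kernel logs a line containing `Killed process <pid> (<name>)`, which you can read back with `journalctl -k` or `dmesg` (the latter may need root). Below is a minimal sketch that pulls those victims out of piped log text; the script name `oom_victims.py` is hypothetical, and the surrounding message wording differs between kernel versions, so the regex only relies on the `Killed process` part.

```python
import re
import sys

# Matches "Killed process 1234 (java) ..." in both older kernel logs and the
# newer "Out of memory: Killed process 1234 (java) ..." form.
KILLED = re.compile(r"Killed process (\d+) \(([^)]*)\)")


def oom_victims(lines):
    """Yield (pid, name) for every OOM-killer kill found in kernel log lines."""
    for line in lines:
        match = KILLED.search(line)
        if match:
            yield int(match.group(1)), match.group(2)


if __name__ == "__main__":
    if sys.stdin.isatty():
        sys.exit("usage: journalctl -k | python3 oom_victims.py")
    for pid, name in oom_victims(sys.stdin):
        print(pid, name)
```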

Disagree; the OOM killer likes to kill random processes, not just the ones consuming memory.

The OOM killer usually kills the biggest processes. This might not be the process requesting more memory when the OOM killer kicks in, but it is always a process consuming a lot of memory, never a small one (unless there are only small ones).
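You don't have to wait for a kill to see who the likely victim is: Linux exposes the score the OOM killer uses in `/proc/<pid>/oom_score` (higher means more likely to be picked). On current kernels that score is driven mainly by the process's memory footprint and shifted by `/proc/<pid>/oom_score_adj`, where -1000 exempts a process and +1000 makes it the preferred victim. Below is a minimal sketch, assuming Linux, that lists the current top candidates; the function names are my own.

```python
import os


def read_int(path):
    with open(path) as f:
        return int(f.read().strip())


def oom_candidates(n=5, proc="/proc"):
    """Return (score, adj, pid, name) for the n highest oom_score processes."""
    rows = []
    for pid in os.listdir(proc):
        if not pid.isdigit():
            continue
        base = os.path.join(proc, pid)
        try:
            score = read_int(os.path.join(base, "oom_score"))
            adj = read_int(os.path.join(base, "oom_score_adj"))
            with open(os.path.join(base, "comm")) as f:
                name = f.read().strip()
        except (OSError, ValueError):  # process exited or file unreadable
            continue
        rows.append((score, adj, int(pid), name))
    rows.sort(reverse=True)
    return rows[:n]


if __name__ == "__main__":
    print(" score    adj      pid  name")
    for score, adj, pid, name in oom_candidates():
        print(f"{score:6} {adj:6} {pid:8}  {name}")
```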

I’ve had the OOM killer kill ssh or MySQL because Tomcat is a piece of shit. It’s not always correct.

The OOM killer kills processes, not the kernel. You might be thinking of a kernel panic.