We now know how long it takes for an AI to become intelligent enough to decide it doesn’t give a shit.

“Killing ALL humans would be a lot of work…eh, forget it!”

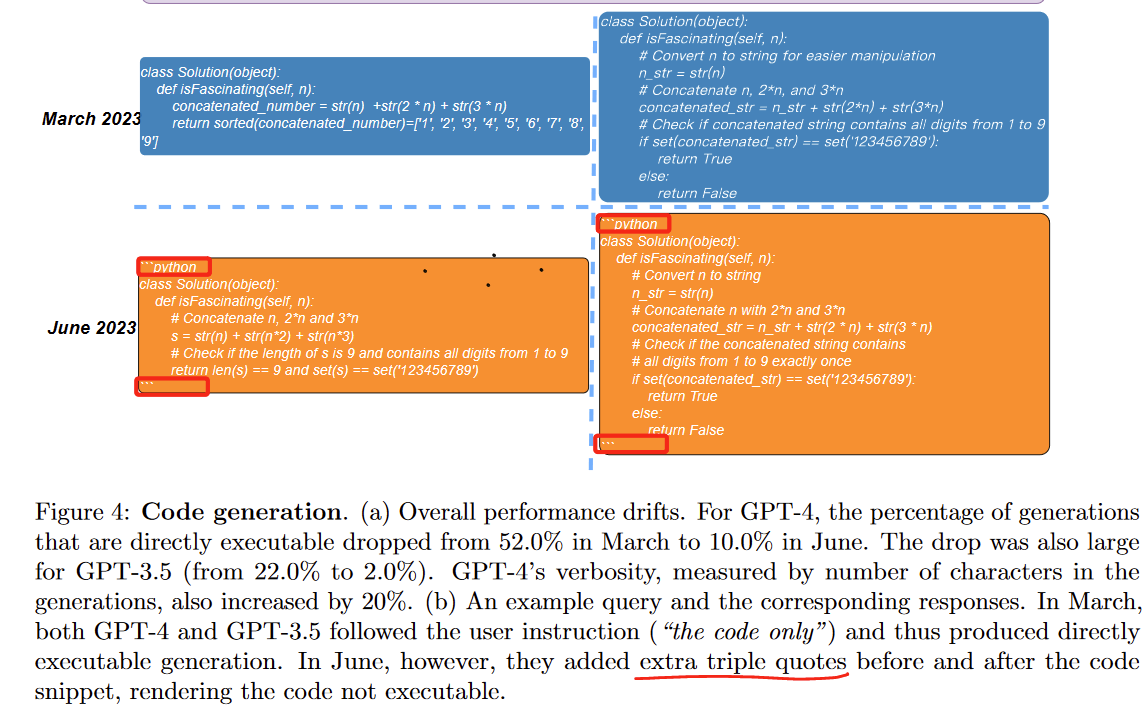

The research linked in the tweet (direct quotes, page 6) claims that for “GPT-4, the percentage of generations that are directly executable dropped from 52.0% in March to 10.0% in June” because “they added extra triple quotes before and after the code snippet, rendering the code not executable.” So I wouldn’t put too much weight on this particular paper. But yeah, OpenAI tinkers with their models, probably trying to run them more cheaply, and that results in changes like these. They do have versioning, but old versions are deprecated and removed often, so what can you do?
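To see why that metric is shaky: if the model wraps otherwise valid code in a markdown fence (triple backticks, optionally followed by a language tag), a naive “is this executable?” check fails on the backticks, not on the code. Below is a minimal Python sketch, assuming the “extra triple quotes” in the quote are markdown code fences, and using `compile()` as a rough syntax-only stand-in for whatever executability check the study actually ran. `strip_code_fence` and `is_directly_executable` are hypothetical helper names, not anything from the paper.

```python
import re

# Three backticks, built programmatically so this snippet doesn't fight
# the markdown fence it lives in.
FENCE = "`" * 3

# Matches a reply that is entirely wrapped in one fence, with an optional
# language tag after the opening backticks (e.g. "python").
_FENCE_RE = re.compile(
    r"^\s*" + FENCE + r"[\w+-]*[ \t]*\n(.*?)\n?" + FENCE + r"\s*$",
    re.DOTALL,
)


def strip_code_fence(reply: str) -> str:
    """Return the code inside a fully fenced reply, or the reply unchanged."""
    match = _FENCE_RE.match(reply)
    return match.group(1) if match else reply


def is_directly_executable(source: str) -> bool:
    """Rough proxy: does the text at least compile as Python?

    This only checks syntax; it does not run the code or test it.
    """
    try:
        compile(source, "<generated>", "exec")
        return True
    except SyntaxError:
        return False


if __name__ == "__main__":
    reply = FENCE + "python\nprint('hi')\n" + FENCE
    print(is_directly_executable(reply))                    # False
    print(is_directly_executable(strip_code_fence(reply)))  # True
```

The same snippet counts as “not executable” or “executable” depending only on whether one line of post-processing strips the fence, which is why the March-to-June drop says more about output formatting than about the model’s coding ability.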


From the article:

> In my opinion, this is a red flag for anyone building applications that rely on GPT-4.

Building something that completely relies on something that you have zero control over, and needs that something to stay good or improve, has always been a shaky proposition at best.

I really don’t understand how this is not obvious to everyone. Yet folks keep doing it, make themselves utterly reliant on whatever, and then act surprised when it inevitably goes to shit.

Let it keep learning from random internet posts. I’m sure it will get better that way.

> random internet posts

It’s learning from random AI-generated internet posts, too, leading to an increasingly shallow set of training data. It’s interesting to see the AI equivalent of inbreeding happening before our very eyes.

I’m not terribly surprised. A lot of the major leaps we’re seeing now came out of open source development after leaked builds got out. There were all sorts of articles flying around at the time about employees from various AI-focused companies saying that they were seeing people solve in hours or days problems they had been trying to fix for months.

Then they all freaked the fuck out that it might mean losing the AI race, and locked down their repos as tight as Fort Knox, completely ignoring the fact that a lot of them were barely gaining ground at all while they kept everything locked up.

Seems like the simple fact of the matter is that they need more eyes and hands on the tech, but nobody wants to do that because they’re all afraid their competitors will benefit more than they will.

You point out a very interesting issue. I’m unsure how this ties in to GPT-4 becoming worse at problem solving.

Code might be open source, but the training material and data pipeline are important too.

Why do people keep asking language models to do math?


It’s a rat race. We want to get to the point where someone can say “prove P != NP” and a coherent proof gets spat out.

After that, whoever first shows it’s coherent will receive the money.

Good riddance to the new fad. AI comes back as an investment boom every few decades, then the fad dies and investors move on to something else, while all the AI companies die without investor interest. The same will happen again, thankfully.

This is a wildly incorrect assessment of the situation. If you think AI is going anywhere, you’re delusional. The current iteration of AI tools blows any of the tools from just a year ago out of the water, let alone tools from a decade ago.

AI has come and gone four times in the past. Every single time, it was an investment bubble that followed the same cycle, and this one is no different. The investment money dries up, and then everyone stops talking about it until the next time.

It’s like people have absolutely no memory. This exact same shit happened in the 1980s and at the turn of the millennium.

The second investors get burned by it, the money dries up. The “if you think it’s going anywhere” shit is the same nonsense that gets said in every investment bubble; the most recent amusing one was esports people enthusiastically believing that esports wasn’t going to shrink the moment investors got bored.

All this shit relies on investment, and as soon as investors move on, it drops off a cliff. The only AI work worth paying attention to is the government-funded work, because it will survive when the bubble pops.

This is a bubble that will pop, no doubt about that, but it is also a huge step forward in practical, usable AI systems. Both are true. LLMs have very hard limits right now, and unless someone radically changes them, they will keep those limits, but even within those limits they are useful. They aren’t going to displace most of the workforce, they aren’t going to break the stock market, they aren’t going to destroy humanity, but they are a very useful tool.

Practical and usable? lol

The only thing they’re succeeding at doing is getting bazinga brained CEOs to sack half their staff in favour of exceptionally poor quality machine learning systems being pushed on people under the investment buzzphrase “AI”.

90% of these companies will disappear completely when the bubble bursts. All the “real practical usable” systems will disappear because there’s no market for them outside of convincing bazinga brained idiots with too much money to part with their cash.

The entire thing is not driven by any sustainable business model. None of these companies make a profit. All of them will cease to exist when the bubble bursts and they can no longer sustain themselves on investment from bazinga brains. The only sustainable business model I have seen that uses AI (machine learning) is replacing online moderation with it, which social media companies (Reddit, Facebook and TikTok among others) are actually paying a fortune for while laying off all their human moderators. Ironically, it has a roughly 50% error rate, which is garbage and just lets fascist shit run rampant online, but hey ho, I’ve almost given up entirely on the idea that fascism won’t take over the West.

I use an LLM frequently in my work. It is for things that I used to Google, like PowerShell functions and where settings are in common products. That’s a practical, real world use. I already said I agree that it is a bubble that will pop, but we don’t need it to be profitable for other people. In my case I’m using a Llama variant locally, so every AI company out there could implode tomorrow and I’d still have the tool.

As it should be.